At AE, we have set up a 'garage' initiative as a community for working with emerging technology. Luckily for us, it’s not a boring empty box for cars to park, but a creative space with all kinds of hardware to experiment with. One of the goals with this garage initiative is to gain hands-on experience with new tech, like computer vision with neural networks for example. An important aspect of this is that it is an open community for all colleagues to collaborate and elaborate on ideas. So, the myth of the AE Garage begins!

As a data scientist at AE, with a considerable amount of interest in computer vision and machine learning, the idea of doing something with both things is obvious. Best thing, I’m not the only one at work with the same interest. After some discussion with a few colleagues, we ended up with our very first project ‘The Parking Vision Project’. The idea is to provide a chatbot that counts the number of free parking spots at our highly overcrowded parking. My colleague Alex Wauters wrote here on the technical aspects of getting it to run end-to-end. In this blog post, I will elaborate on the computer vision with neural networks learnings.

It’s still a work in progress, and we aim to be able to share more learnings in the months to come. Various data scientists from AE will contribute to this project in order to make progress, but above all to gain hands-on experience in a fun and challenging way.

This is what we’ve learned so far.

# 1 - Re-use state-of-the-art

One of the crucial areas in computer vision is object detection and recognition and it’s a rapidly evolving technology. Because of this we fortunately do not have to reinvent everything about how object detection can be done based on images or video. After exploring convolutional neural networks (CNN) for a while, we decided to use this kind of classification model for detecting, recognizing and thus counting cars for our use case.

There are several pre-trained CNN models available, and it may not immediately be obvious which one to pick based on your use case. There’s YOLOv1 v2 v3, Fast or Faster R-CNN, Retina Net, SSD and etc. We decided to go with an off-the shelf implementation of Mask R-CNN trained on the COCO dataset, which already have the output of all vehicle class labels. As said earlier, there is absolutely no reason for us to rebuild it from scratch even if we need to tweak (fine-tune) the model a little bit to get a better fit for our use case. Besides, there is enough information available on the web on how to do feature extraction of those models or how to fine-tune a pre-trained model for a different task than it was originally trained for. I would suggest that you read some articles on pyimagesearch.com if you would like to know more about the fine-tuning process.

# 2 - Iterate over and over again

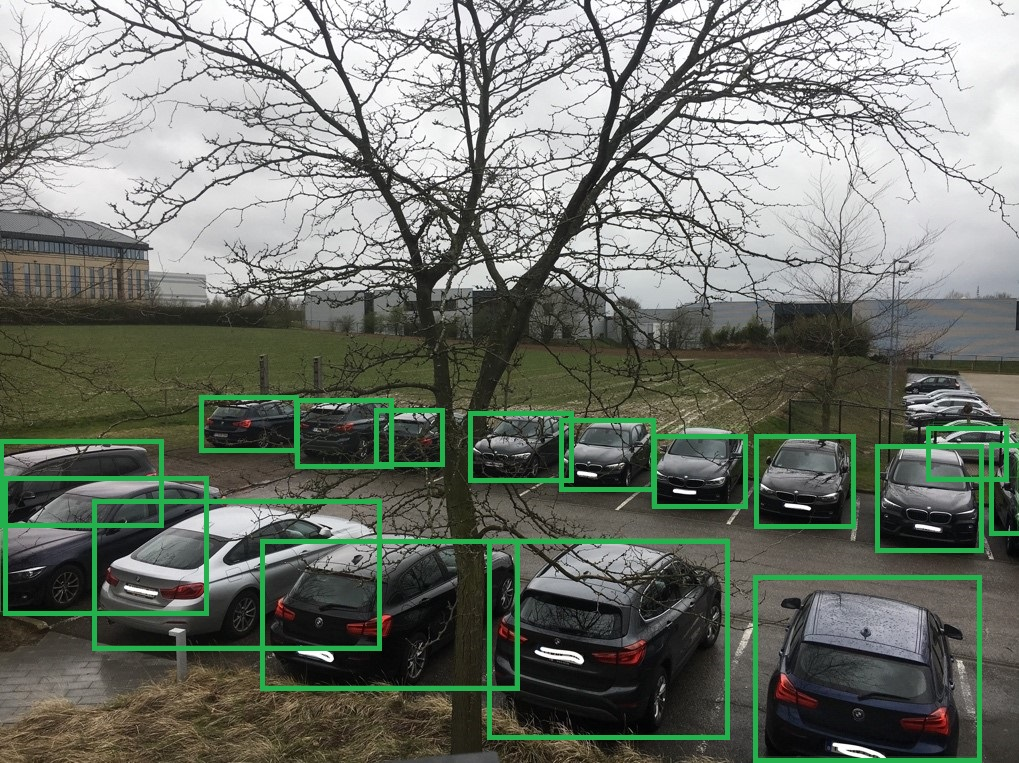

The most important thing is to have a first complete run of the process as quickly as possible: provide images of the parking lot, apply our CNN classification model, make evaluation and iteratively improve over and over again. Without too much pre-processing, apart from some image resizing, we fed our photos of the 5 different camera images to our model, with a working result of course, but still some conclusions or problems that we can deduct from this.

First Results Car Detection

# 3 - Counting cars twice

The camera images have too much overlap, so we do not count all the distinct cars present in the parking lot and double counting we obviously do not want… Also, another parking lot is next to ours, so we are also counting those cars. We kept a list of problems and we have chosen the most pragmatic solution to get results quickly again. We leave the other issues on our backlog for the later refinement of the process.

A possible solution to tackle the problem of double counting is image stitching which is similar like making a panoramic picture.

#Stitching images

We could try stitching the camera images together so that it becomes one or more continuous images such as the example below (for illustration). The main requirement here is that it highly depends on collecting the right key-points in both images.

(source: pyimagesearch.com)

Can we apply this logic to our images? On the left we have camera 1 and right is the image of camera 2, whereas camera 2 partly overlaps with the image of camera 1, only from a completely different angle. We suspected that this solution would be quite a lost effort as finding the key-points will be pretty difficult anyway.

Left: output camera 1 // Right: output camera 2

# Cropping images

Another way to eliminating the double counting and also excluding adjacent parking places is by hard coding the right region of interest (ROI). One can do this by extracting the right region, rescaling the image to the right input format for the CNN model. However, it turns out to be much faster by eliminating the inverse ROI with some openCV functionality. This also eliminates the part of the resizing part, so a win for us to once again achieve results as quickly as possible and learn from them.

So, we have our original image of camera 1 again (this time from another timestamp):

Image from Camera 1

We eliminate the inverse of our ROI by filling it in with black. At the moment this seems to be a good solution because our cameras have fixed angles and will not change positions that often… well actually never.

Pre-processed Image from Camera 1

#4 - Car detection is not flawless

There is still a wide variation in the correct classification of cars due to a number of external factors. For example, which season it is. In the winter it will be easier to detect cars because there is little or no visual obstruction of the trees around the parking lot. In the summer there will be a lot of visibility obstruction due to the trees but also more reflection of the sun on cars making the detection/classification of cars more difficult. Also, the time of the day relates to the position of the sun, thus also impacting our prediction performance. E.g. in the morning or in the evening when there is clearly less light the detection of cars is extra difficult again. These are still things we need to tackle, but for now it’s good to have those issues on or backlog.

Car Detection on Image from Camera 1

# 5 - Slow classification process

Another problem is the model itself. We use quite a heavy model that can actually predict more than 80 labels. We don’t need this multi-class model and would better use a lighter model that we can fine-tune to our use case. The fine-tuning part means that we have the model partly retrained on a subset of data in combination to reduce the classification problem to a binary classification problem instead of a multi-classification (80 labels). There exists several interesting datasets about cars, like cnrpark, so we’ll definitely want try retraining (a part of) our model on this dataset.

# 6 - Lessons learned

Although we are achieving results as quick as possible and very pragmatically, we are nevertheless able to map out a number of major problems. Things that we had not initially thought of will always pop up. These are the more complex problems that we now try to solve one by one.

If you have any suggestions on how to improve the set-up, let us know! If you need some help getting started with emerging technologies at your company, please don't hesitate to reach out.